DALL-E: As Viewed Through an Ethical Perspective

DALL-E uses natural language processing to convert text descriptions into images. OpenAI introduced it in 2021. There are two versions: DALL-E 1, whose images were grainy and of inferior quality, and the newer DALL-E 2, with much-improved image quality. DALL-E 2 not only recognizes individual images but also shows the connections among consecutive images.

DALL-E 2 is not yet publicly available, so its reach remains limited for now, but that will not last long. The technology has enormous potential to disrupt industries and jobs, as well as our understanding of human creativity and art.

The name DALL-E combines two names: Salvador Dalí, the famous Spanish surrealist artist, and WALL-E, the robot in Pixar’s animated film of the same name. The saying “A picture is worth a thousand words” is often attributed to the Norwegian playwright Henrik Ibsen. DALL-E does the exact opposite: it creates innumerable visuals from a limited textual description.

DALL-E has three main functions for creating images:

- Create images from a text prompt;

- Create variations of an image;

- Edit an image, generating new parts from a prompt.
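As a rough illustration of how the three functions differ, the sketch below models which inputs each one needs. The names `ImageTask`, `REQUIRED_INPUTS`, and `missing_inputs` are hypothetical and are not part of any OpenAI interface. The split into a prompt, a source image, and a mask is an assumption based on the list above.

```python
from enum import Enum


class ImageTask(Enum):
    """The three image-creation functions listed above (hypothetical names)."""
    GENERATE = "create images from a text prompt"
    VARIATION = "create variations of an image"
    EDIT = "edit an image, generating new parts from a prompt"


# Assumed inputs per task. The mask that marks the region to regenerate is an
# assumption of this sketch, not something stated in the article.
REQUIRED_INPUTS = {
    ImageTask.GENERATE: {"prompt"},
    ImageTask.VARIATION: {"image"},
    ImageTask.EDIT: {"prompt", "image", "mask"},
}


def missing_inputs(task, **inputs):
    """Return the sorted names of required inputs that were not supplied."""
    supplied = {name for name, value in inputs.items() if value is not None}
    return sorted(REQUIRED_INPUTS[task] - supplied)


if __name__ == "__main__":
    # A text prompt alone is enough for generation.
    print(missing_inputs(ImageTask.GENERATE, prompt="a chair in a surrealist style"))
    # An edit also needs to know which part of the image to regenerate.
    print(missing_inputs(ImageTask.EDIT, prompt="add a lamp", image="room.png"))
```

The point of the sketch is only that generation starts from words alone, while variation and editing start from an existing image.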

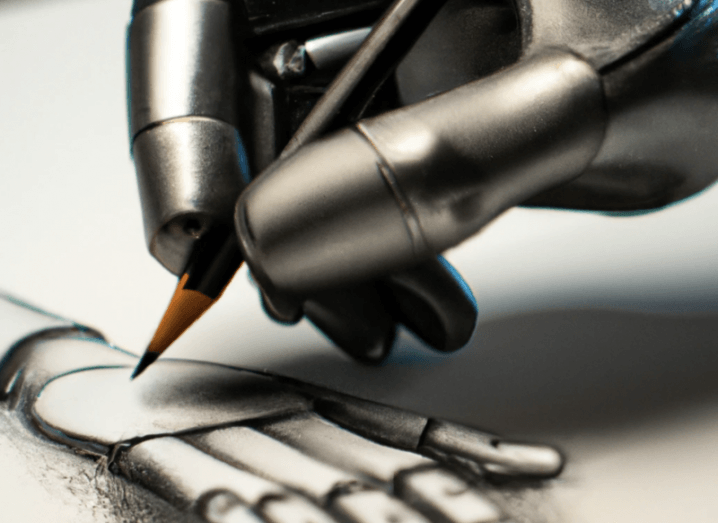

A creative tool.

DALL-E inspires and accelerates the creative process for artists and creative professionals. People have been using DALL-E to make music videos for young cancer patients, create magazine covers, and bring novel concepts to life.

Is AI going to change our relationship with art? Are models like DALL-E 2 just the beginning of an ever-improving form of technology that will eventually surpass our creativity? These questions point to an impending cultural shift that will go so far as to upend our understanding of what it means to be human.

Shortcomings.

The most important shortcoming is that DALL-E does not understand that the objects it paints refer to physical things in the world. If you ask DALL-E 2 to draw a chair, it can do so in all colours, forms, and styles, yet it does not know that we use chairs for sitting. Art is only meaningful in its relationship with either the external physical world or our internal subjective world, and DALL-E 2 has no access to the former and lacks the latter.

DALL-E has minimal generalization capabilities. Like any other deep learning model (or, more broadly speaking, any AI), DALL-E 2 can, at best, produce outputs that stay within the distribution of its training set. This means DALL-E cannot understand the underlying realities on which its paintings are based, nor generalize them to novel situations, styles, or ideas it has never seen before.

This image takes a literal description.

DALL-E also shows racial and gender bias. For example, the prompt ‘Executive Head’ has produced more images of men than of women, and the prompt ‘Offender’ has produced more images of people of colour. Although violent images and graphic sexual content have been removed from the training data, much work remains to ensure that the platform does not disturb people mentally or emotionally and does not violate basic human rights.
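One simple way to make such bias measurable is to generate many images for a single prompt, have people label who appears in them, and compare the shares. The sketch below assumes that the labelling has already been done by hand. The function name `label_shares` and the sample labels are hypothetical and are not real DALL-E results.

```python
from collections import Counter


def label_shares(labels):
    """Return each label's share of the total as a fraction between 0 and 1."""
    if not labels:
        raise ValueError("need at least one labelled image")
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


if __name__ == "__main__":
    # Made-up annotations for ten images from one prompt, for illustration only.
    sample = ["man"] * 8 + ["woman"] * 2
    for label, share in sorted(label_shares(sample).items()):
        print(f"{label}: {share:.0%}")
```

Repeating the same tally across many prompts, and comparing the shares with a chosen reference such as an even split, would show where the skew is largest.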

Scope for Artists.

DALL-E gives artists a space where they can earn credit. However, artists working with DALL-E should receive appropriate training so that they can be as unbiased as possible. Although countless images can be created, we need to ensure that no race, ethnicity, or gender is hurt. It would therefore be best not to open the platform to all artists, but to appoint artists and train them to be unbiased and to represent people from diverse backgrounds.

About the Author.

Anwita Maiti is an AI Ethics Researcher at deepkapha AI Research Lab. She comes from a background in Humanities and Social Sciences and holds a PhD in Cultural Studies.