Administrative Information

| Title | Derivation and application of backpropagation |

| Duration | 60 |

| Module | B |

| Lesson Type | Tutorial |

| Focus | Technical - Deep Learning |

| Topic | Deriving and Implementing Backpropagation |

Keywords

Backpropagation, activation functions, derivation

Learning Goals

- Develop an understanding of gradient and learning rate

- Derive backpropagation for hidden and outer layers

- Implement backpropagation unplugged and plugged using different activation functions

Expected Preparation

Learning Events to be Completed Before

Obligatory for Students

- Calculus revision (derivatives, partial derivatives, the Chain rule)

Optional for Students

None.

References and background for students

- John D. Kelleher and Brian Mac Namee. (2018), Fundamentals of Machine Learning for Predictive Data Analytics, MIT Press.

- Michael Nielsen. (2015), Neural Networks and Deep Learning, 1. Determination Press, San Francisco, CA, USA.

- Charu C. Aggarwal. (2018), Neural Networks and Deep Learning, 1. Springer

- Antonio Gulli, Sujit Pal. Deep Learning with Keras, Packt, [ISBN: 9781787128422].

Recommended for Teachers

None.

Instructions for Teachers

- This tutorial introduces students to the fundamentals of the backpropagation learning algorithm for an artificial neural network. It consists of the derivation of the backpropagation algorithm using pen and paper, followed by the application of the algorithm with three different hidden-layer activation functions (Sigmoid, Tanh and ReLU), first in Python using only the NumPy library (for matrix manipulation) and then using Keras. This builds a fundamental understanding of how varying the activation function affects the way a neural network learns and of how the activation functions differ in computational complexity. It also takes students from pen and paper, to code written from scratch using NumPy, to a high-level module (Keras).

- Note: The topology is the same as in Lecture 1/Tutorial 1, but the weights and inputs are different. You can, of course, use the same weights.

- The students will be presented with four problems (the first being optional or as additional material):

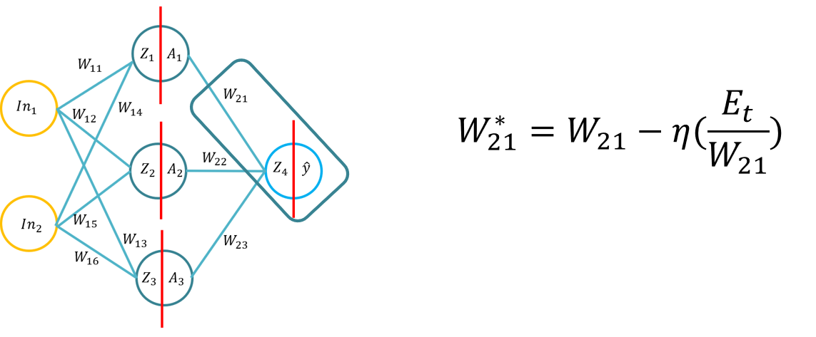

- Problem 1: The derivation of the backpropagation algorithm (using the Sigmoid function for the inner and outer activation functions and MSE as the loss function). Students will be asked to derive the backpropagation formula (20 minutes to complete).

- Problem 2: Students will apply three activation functions for a single weight update (SGD backpropagation), using pen and paper (20 minutes to complete):

- Sigmoid (Hidden layer), Sigmoid (Outer Layer) and MSE

- Tanh (Hidden layer), Sigmoid (Outer Layer) and MSE

- ReLU (Hidden layer), Sigmoid (Outer Layer) and MSE

- Problem 3: Students will be asked (with guidance depending on their prior coding experience) to develop a neural network from scratch using only the NumPy module, with the given weights and activation functions, where any one of the hidden-layer activation functions can be selected to update the weights using SGD (20 minutes to complete).

- Problem 4: Students will be asked (with guidance depending on their prior coding experience) to develop a neural network using the TensorFlow 2.x module with the built-in Keras module, first with the given weights and activation functions, and then with random weights, to complete one or several weight updates. Please note that, as Keras uses a slightly different MSE loss, the loss reduces more quickly in the Keras example.

- Keras MSE = loss = square(y_true - y_pred)

- Tutorial MSE = loss = (square(y_true - y_pred))*0.5

- The subgoal of these problems is to get students to understand the backpropagation algorithm and apply it, so that when tuning hyperparameters they will better understand the hyperparameters' effects.
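For reference, the result students are expected to reach in Problem 1 can be summarised as follows. This is only a sketch under assumed notation, since the tutorial's own symbols and topology are given in the figure rather than in the text: a single training example with input $x_i$, target $t$, hidden outputs $h_j$, network output $o$, hidden weights $v_{ji}$, output weights $w_j$, biases $b_j$ and $b_o$, learning rate $\eta$, and the tutorial's loss with the factor $\tfrac{1}{2}$.

$$
\begin{aligned}
E &= \tfrac{1}{2}(t - o)^2, \qquad o = \sigma(z_o), \qquad z_o = \sum_j w_j h_j + b_o, \qquad h_j = \sigma(z_j), \qquad z_j = \sum_i v_{ji} x_i + b_j \\
\delta_o &= \frac{\partial E}{\partial z_o} = (o - t)\, o\,(1 - o) \\
\frac{\partial E}{\partial w_j} &= \delta_o\, h_j \\
\delta_j &= \frac{\partial E}{\partial z_j} = \delta_o\, w_j\, h_j\,(1 - h_j) \\
\frac{\partial E}{\partial v_{ji}} &= \delta_j\, x_i \\
w &\leftarrow w - \eta\, \frac{\partial E}{\partial w} \quad \text{(applied in the same way to every weight and bias)}
\end{aligned}
$$

For Problem 2, only the hidden-layer factor changes: $h_j(1 - h_j)$ becomes $1 - h_j^2$ for Tanh, and $1$ if $z_j > 0$ (otherwise $0$) for ReLU. If the factor $\tfrac{1}{2}$ is dropped from the loss, $\delta_o$ and every gradient that depends on it double, which is consistent with the faster loss reduction noted for the Keras example.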

Outline

| Duration (Min) | Description |

|---|---|

| 20 (Optional) | Problem 1: derivation of the backpropagation formula using the Sigmoid function for the inner and outer activation functions and MSE as the loss function (Optional) |

| 20 | Problem 2: Students will apply three activation functions for a single weight update (SGD backpropagation), using pen and paper |

| 20 | Problem 3: Students will develop a neural network from scratch using only the NumPy module, where the user can select any of three hidden-layer activation functions and the code can perform backpropagation |

| 10 | Problem 4: Students will use the TensorFlow 2.x module with the built-in Keras module to perform backpropagation using SGD. |

| 10 | Recap on the forward pass process |
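As a rough illustration of what Problem 3 in the outline asks for, the following is a minimal NumPy sketch of one SGD weight update with a selectable hidden-layer activation. It is not the tutorial's reference solution: the 2-2-1 topology, the input, target, weights, learning rate, and the names `ACTIVATIONS` and `sgd_step` are all hypothetical choices made for this example. The output layer uses Sigmoid and the loss is the tutorial's half squared error.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


# Each entry maps a name to (activation, derivative), both taking the
# pre-activation z. These names are hypothetical, chosen for this sketch.
ACTIVATIONS = {
    "sigmoid": (sigmoid, lambda z: sigmoid(z) * (1.0 - sigmoid(z))),
    "tanh": (np.tanh, lambda z: 1.0 - np.tanh(z) ** 2),
    "relu": (lambda z: np.maximum(0.0, z), lambda z: (z > 0).astype(float)),
}


def sgd_step(x, t, W1, b1, W2, b2, hidden="sigmoid", lr=0.1):
    """Run one forward pass and one SGD update for a single example.

    Returns the updated parameters and the loss measured before the update.
    """
    act, act_grad = ACTIVATIONS[hidden]

    # Forward pass: hidden layer, then Sigmoid output layer.
    z1 = W1 @ x + b1
    h = act(z1)
    z2 = W2 @ h + b2
    o = sigmoid(z2)
    loss = 0.5 * np.sum((t - o) ** 2)  # tutorial convention: 0.5 * squared error

    # Backward pass: error terms for the output and hidden layers.
    delta_out = (o - t) * o * (1.0 - o)
    delta_hidden = (W2.T @ delta_out) * act_grad(z1)

    # Gradient descent update; new arrays are returned, inputs are not modified.
    new_params = (
        W1 - lr * np.outer(delta_hidden, x),
        b1 - lr * delta_hidden,
        W2 - lr * np.outer(delta_out, h),
        b2 - lr * delta_out,
    )
    return new_params, loss


# Assumed example values (not the tutorial's weights or inputs).
x = np.array([0.5, 0.2])
t = np.array([1.0])
W1 = np.array([[0.1, 0.4], [-0.3, 0.2]])
b1 = np.zeros(2)
W2 = np.array([[0.3, -0.1]])
b2 = np.zeros(1)

for name in ACTIVATIONS:
    params, loss_before = sgd_step(x, t, W1, b1, W2, b2, hidden=name)
    _, loss_after = sgd_step(x, t, *params, hidden=name)
    print(f"{name:8s} loss before={loss_before:.6f} after={loss_after:.6f}")
```

Keeping each activation next to its derivative means the backward pass is identical for all three options, so students can compare the effect of the activation alone. With the assumed values, one hidden unit has a negative pre-activation, so the ReLU run also shows a unit that receives no gradient.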

Acknowledgements

The Human-Centered AI Masters programme was co-financed by the Connecting Europe Facility of the European Union under Grant №CEF-TC-2020-1 Digital Skills 2020-EU-IA-0068.