Administrative Information

| Title | Forward propagation |
|---|---|
| Duration | 60 minutes |
| Module | B |
| Lesson Type | Lecture |
| Focus | Technical - Deep Learning |
| Topic | Forward pass |

Keywords

Forward pass, Loss

Learning Goals

- Understand the process of a forward pass

- Understand how to calculate a forward-pass prediction, as well as the loss, by hand ("unplugged")

- Develop a forward pass in Python using no libraries other than NumPy

- Develop a forward pass using Keras
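As a pen-and-paper illustration of the "unplugged" learning goal, here is a minimal worked forward pass under assumed values: two inputs, two hidden neurons with ReLU activation, one sigmoid output neuron, and MSE as the loss. All weights, biases, inputs and the target are hypothetical numbers chosen to keep the arithmetic simple; they are not taken from the lecture slides.

$$
\begin{aligned}
\mathbf{x} &= (x_1, x_2) = (1.0,\ 2.0), \qquad y = 0.8 \quad (\text{an } 80\% \text{ grade, normalised}) \\
h_1 &= \mathrm{ReLU}(0.5\,x_1 - 0.25\,x_2 + 0.1) = \mathrm{ReLU}(0.5 - 0.5 + 0.1) = 0.1 \\
h_2 &= \mathrm{ReLU}(0.2\,x_1 + 0.3\,x_2 - 0.3) = \mathrm{ReLU}(0.2 + 0.6 - 0.3) = 0.5 \\
z &= 1.0\,h_1 + 2.0\,h_2 - 1.1 = 0.1 + 1.0 - 1.1 = 0 \\
\hat{y} &= \sigma(z) = \frac{1}{1 + e^{-z}} = \frac{1}{1 + 1} = 0.5 \\
L_{\text{MSE}} &= \frac{1}{n}\sum_{i=1}^{n} (y_i - \hat{y}_i)^2 = (0.8 - 0.5)^2 = 0.09 \qquad (n = 1)
\end{aligned}
$$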

Expected Preparation

Learning Events to be Completed Before

None.

Obligatory for Students

None.

Optional for Students

- Matrix multiplication

- Getting started with NumPy

- Knowledge of linear and logistic regression (from Period A, Machine Learning: Lecture: Linear Regression, GLRs, GADs)

References and background for students

- John D. Kelleher and Brian Mac Namee. (2018), Fundamentals of Machine Learning for Predictive Data Analytics, MIT Press.

- Michael Nielsen. (2015), Neural Networks and Deep Learning, 1. Determination Press, San Francisco, CA, USA.

- Charu C. Aggarwal. (2018), Neural Networks and Deep Learning, 1. Springer.

- Antonio Gulli and Sujit Pal. Deep Learning with Keras, Packt. ISBN: 9781787128422.

Recommended for Teachers

None.

Lesson materials

Instructions for Teachers

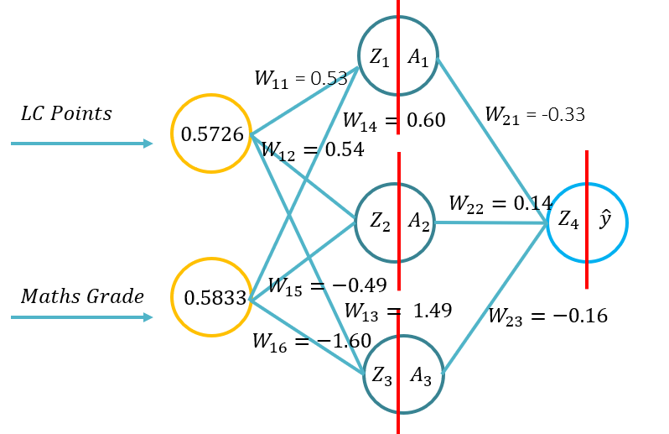

This lecture introduces students to the fundamentals of forward propagation in an artificial neural network, including its topology and components (weights, synapses, activation functions and loss functions). Students will then be able to perform a forward pass with pen and paper, in Python using only the NumPy library (for matrix manipulation), and then in Keras as part of the tutorial associated with this learning event (LE). This builds a fundamental understanding of which activation functions suit specific problem contexts and how activation functions differ in computational complexity. The lecture also examines output-layer activation functions and their corresponding loss functions for use cases such as binary classification, regression and multi-class classification.

- Overview of a neural network

- Definition of terms/components

- Weights and activation functions

- Loss functions: which one to use for which problem context

- Using matrices to conduct a forward pass
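The topics above (weights, activation functions, loss and matrices) come together in a few lines of NumPy. The sketch below is a minimal illustration under stated assumptions: one hidden layer, inputs stored one sample per row (so the weighted sum is `X @ W1`), a sigmoid output neuron and MSE loss. The function names `forward`, `relu`, `sigmoid` and `mse`, and all weight values, are hypothetical choices for illustration, not code from the lecture materials.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid: squashes any real value into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """Rectified linear unit: element-wise max(0, z)."""
    return np.maximum(0.0, z)

def forward(X, W1, b1, W2, b2, hidden_activation=relu):
    """Forward pass for a network with one hidden layer (hypothetical helper).

    X  : (n_samples, n_inputs)
    W1 : (n_inputs, n_hidden), b1 : (n_hidden,)
    W2 : (n_hidden, 1),        b2 : (1,)
    Returns sigmoid outputs of shape (n_samples, 1).
    """
    H = hidden_activation(X @ W1 + b1)  # hidden layer: weighted sums, then activation
    return sigmoid(H @ W2 + b2)         # output layer: sigmoid keeps predictions in (0, 1)

def mse(y_true, y_pred):
    """Mean squared error averaged over all samples."""
    return float(np.mean((y_true - y_pred) ** 2))

# Hypothetical weights: each column of W1 holds the incoming weights of one hidden neuron.
W1 = np.array([[0.5, 0.2],
               [-0.25, 0.3]])
b1 = np.array([0.1, -0.3])
W2 = np.array([[1.0],
               [2.0]])
b2 = np.array([-1.1])

X = np.array([[1.0, 2.0]])  # one sample with two features
y = np.array([[0.8]])       # normalised target (an 80% grade scaled to 0.8)

y_hat = forward(X, W1, b1, W2, b2)
loss = mse(y, y_hat)
print(y_hat, loss)
```

With these hypothetical weights the prediction is approximately 0.5 and the loss approximately 0.09. Passing `hidden_activation=np.tanh` or `hidden_activation=sigmoid` swaps in the other hidden activation functions covered in the lecture; stacking a whole batch of samples as extra rows of `X` reuses the same two matrix products, which is why fast matrix multiplication matters.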

Note:

- Sigmoid is used in the output layer, with MSE as the loss function.

- With time limitations, a single approach/topology/problem context was selected. Typically, one would start with regression for a forward pass (with MSE as the loss function) and for deriving backpropagation, using a linear activation function in the output layer because this reduces the complexity of the backpropagation derivation. One would then move to binary classification, with a sigmoid in the output layer and a binary cross-entropy loss function. Given the time constraints, this set of lectures uses three example hidden-layer activation functions but a single regression problem context. To add the complexity of a sigmoid activation function in the output layer, the regression problem used in the first two lectures of this set is based on a normalised target value (a percentage grade of 0-100% scaled to 0-1), so sigmoid is used as the output-layer activation function. This approach lets students move easily between problem types: for a binary classification problem, they change only the loss function; for a non-normalised regression problem, they simply remove the output-layer activation function.

- The core component is applying these concepts with a high-level library, in this case Keras via TensorFlow 2.x.

- Pen-and-paper work is optional and is used only to show the derivation and application of the forward pass and backpropagation (using the examples from the lecture slides).

- Python code without high-level libraries is used to show how simple a neural network is (using the examples from the lecture slides). As an optional element, this also allows a discussion of fast numerical (matrix) multiplication and of why GPUs/TPUs are used.

- Keras and TensorFlow 2.x are used here and will be used for all future examples.
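The note on moving between problem contexts can be sketched in NumPy. This is a minimal illustration under assumed values: the output-neuron weighted sums `z`, the grades and the pass/fail labels are hypothetical, and `binary_cross_entropy` is a hand-written helper, not a library call. It shows that normalised regression and binary classification share the same sigmoid output and differ only in the loss, while non-normalised regression drops the output sigmoid and keeps MSE.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid: maps any real value into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def mse(y_true, y_pred):
    """Mean squared error, used for the regression contexts."""
    return float(np.mean((y_true - y_pred) ** 2))

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Binary cross-entropy for 0/1 labels (hypothetical helper); clipping avoids log(0)."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(-np.mean(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred)))

# Hypothetical weighted sums z reaching the single output neuron, one per student
z = np.array([0.0, 2.0, -1.0])

# 1) Normalised regression: percentage grade scaled to 0-1, sigmoid output, MSE loss
grades_pct = np.array([80.0, 95.0, 30.0])
y_norm = grades_pct / 100.0
loss_norm_reg = mse(y_norm, sigmoid(z))

# 2) Binary classification (hypothetical pass/fail labels): same sigmoid output, only the loss changes
y_pass = np.array([1.0, 1.0, 0.0])
loss_binary = binary_cross_entropy(y_pass, sigmoid(z))

# 3) Non-normalised regression: no output sigmoid (linear output), MSE on raw percentages.
#    z_linear stands in for hypothetical weighted sums of a network trained on raw grades.
z_linear = np.array([75.0, 90.0, 40.0])
loss_raw_reg = mse(grades_pct, z_linear)

print(loss_norm_reg, loss_binary, loss_raw_reg)
```

With these hypothetical numbers the non-normalised regression loss is 50.0 (squared errors of 25, 25 and 100, averaged). The design point is that the network body is unchanged in all three cases; only the output activation and the loss function are swapped.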

Outline

| Duration (min) | Description |
|---|---|
| 10 | Definition of neural network components |
| 15 | Weights and activation functions (Sigmoid, tanh and ReLU) |
| 15 | Loss functions (regression, binary classification and multi-class classification) |
| 15 | Using matrices for a forward pass |
| 5 | Recap on the forward pass |
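The hidden activation functions in the outline, and the softmax with categorical cross-entropy pairing commonly used for multi-class classification, can be sketched as below. Function names and sample values are illustrative assumptions rather than lecture code. The sketch also hints at the cost difference the lecture discusses: ReLU needs only an element-wise comparison, whereas sigmoid and tanh each evaluate exponentials.

```python
import numpy as np

def sigmoid(z):
    """Range (0, 1); requires an exponential per element."""
    return 1.0 / (1.0 + np.exp(-z))

def relu(z):
    """Range [0, inf); only an element-wise comparison, so it is cheap to compute."""
    return np.maximum(0.0, z)

def softmax(z):
    """Row-wise softmax for multi-class outputs; subtracting the row max avoids overflow."""
    e = np.exp(z - np.max(z, axis=-1, keepdims=True))
    return e / np.sum(e, axis=-1, keepdims=True)

def categorical_cross_entropy(y_onehot, p, eps=1e-12):
    """Mean over samples of -sum(y * log p); clipping avoids log(0)."""
    p = np.clip(p, eps, 1.0)
    return float(-np.mean(np.sum(y_onehot * np.log(p), axis=-1)))

# Compare the three hidden activations on the same hypothetical weighted sums.
# np.tanh is NumPy's built-in hyperbolic tangent, range (-1, 1) and zero-centred.
z = np.array([-2.0, 0.0, 2.0])
for name, f in [("sigmoid", sigmoid), ("tanh", np.tanh), ("relu", relu)]:
    print(name, f(z))

# Multi-class: hypothetical scores for 3 classes on one sample; the true class is index 2.
logits = np.array([[1.0, 2.0, 3.0]])
y_true = np.array([[0.0, 0.0, 1.0]])
probs = softmax(logits)
loss = categorical_cross_entropy(y_true, probs)
print(probs, loss)
```

Because softmax outputs sum to 1 across classes, it plays the role for multi-class classification that sigmoid plays for a single binary output.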

Acknowledgements

The Human-Centered AI Masters programme was co-financed by the Connecting Europe Facility of the European Union under Grant №CEF-TC-2020-1 Digital Skills 2020-EU-IA-0068.